The wired brain: How not to talk about an AI-powered future

The way we talk about AI is a mess. It starts with the most obvious part: the imagery. Just as stock photos of happy people pointing at whiteboards became a symbol of the modern workplace, wired brains and robots have now come to represent “the AI”. But the visual messaging is only a small part of a much larger problem.

Sources: WallpaperHD, GettyImages, CodePen

Illustration is symbolic — it relies on familiarity and evokes associations and expectations. The “wired brain” is pretty straightforward in that way: Intelligence is rooted in our brains, and we want to replicate some of the decisions made by our brains using technology. As technology progresses, it’s steadily competing with our brains, becoming the ultimate extension of man. But the above imagery also sends a different message: there’s an end game, and AI development is merely the search for a key to finally unlock Artificial General Intelligence.

This narrative is misleading and deceptive, because it presents the technology as a monolithic, all-or-nothing endeavor, benchmarked against a human. It sets high expectations but reveals very little, and it perpetuates the stereotype of AI as a magical black box. I think there’s actually a very simple reason why this has been so popular: it sells well. If you have a piece of magical software, if you can make the wired brain happen, people will pay you a lot of money for it.

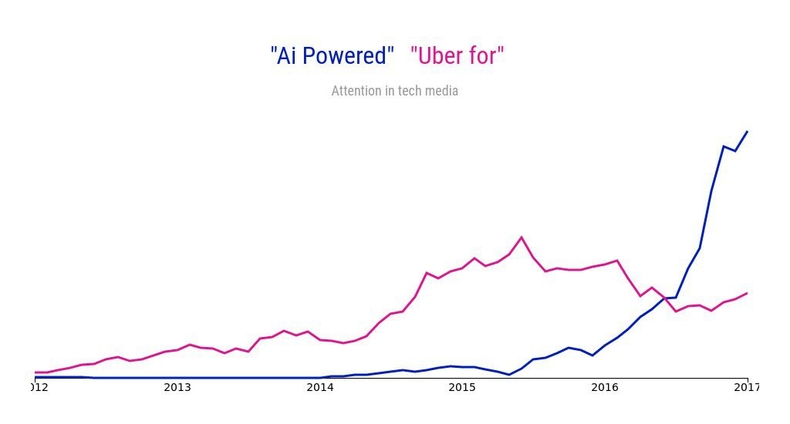

Source: 12K INDEX

The startup playbook says the hard part is figuring out what people want. Actually building it is a minor detail. In other words, the key role is “imagineering”, not engineering. Many great businesses have been built on this assumption. Now we’re simply adding AI to the mix and hoping the same will happen. The truth is, the technology is much less predictable than that, and the way we will actually make use of it in the future will be very different from how we imagine it today.

Imagineering the future

The new opportunities emerging from Machine Learning are very tempting. The wildest scenarios from decades of futuristic science fiction are suddenly (almost) possible. But does that mean they’re actually the most practical things to build? The problem here lies in how those fictional ideas were conceived. The way people imagine technology of the future is heavily biased by their current experiences and expectations.

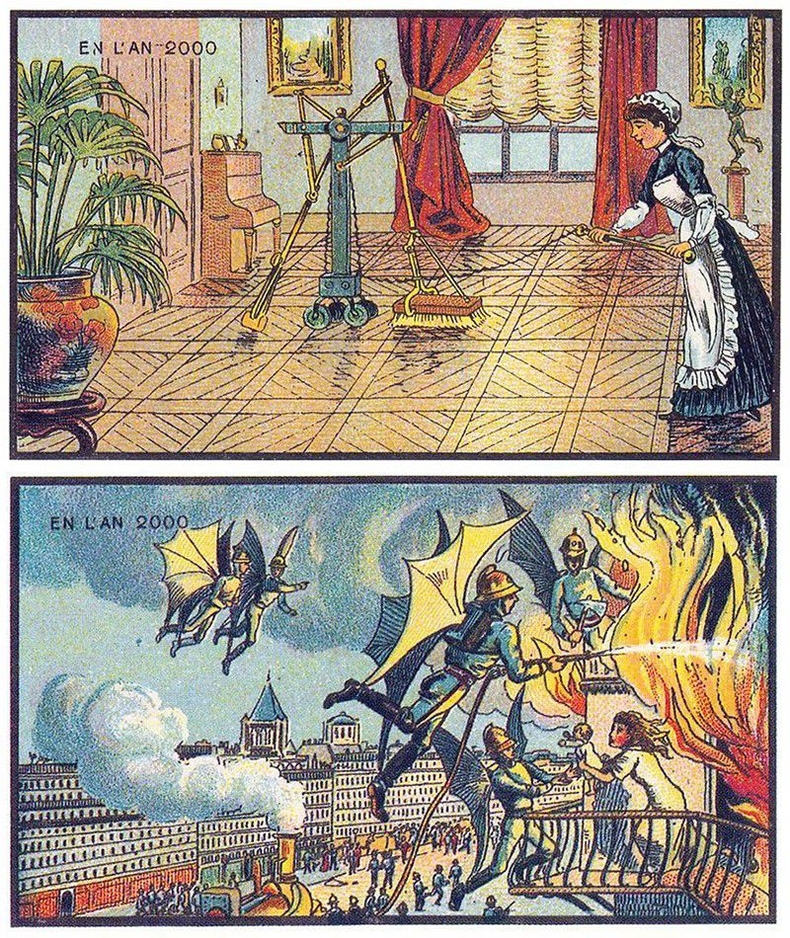

One of my favourite examples of this are these images of how people in 1900 imagined life in the year 2000:

Source: The Washington Post

Those illustrations all seem very cute and quaint, and at the same time, they’re not actually that far from our reality today. The problems depicted are still relevant – but we didn’t just automate the processes of 1900, we also reengineered the ways we were solving those problems, and our society adjusted accordingly.

We didn’t build a machine to operate a broom – we built vacuum cleaners. We didn’t engineer wings for firefighters – we built fire extinguisher drones. We also didn’t create robot personal shoppers – we built online stores.

A 1900-style broom-operating machine would have been about as useful as your average chatbot today: kind of okay at its task once you’ve figured out how to operate it correctly and made sure it doesn’t keep crashing into walls, but completely hopeless in exactly the cases where you’d most appreciate help, like corners and doorsteps. Once the novelty had worn off, I’m sure the diligent housewife of 1900 would have gone back to doing it properly, by hand. It took many iterations, a change of methodology and a shift in people’s expectations of house cleaning to finally arrive at a practical alternative: the robot vacuum cleaner (and even that still isn’t quite perfect).

Artificial assistance

So far, we have been very successful at augmenting and replacing manual labour with technology. In recent years, we have moved on to doing the same for intellectual labour. Humans have always employed other humans to make their lives easier and more convenient. Up until the 1950s, people would hire “knocker-uppers” who’d knock on their doors and windows to wake them up in the morning. As alarm clocks became more affordable and reliable, this job became extinct (and it wasn’t replaced by a window-knocking machine).

Source: Mashable

Other jobs, like that of a personal business assistant, are a lot more complex. We might feel like we’re “thinking outside the box” because we’re much more aware of technological possibilities than people were a century ago, but we still accept today’s problems as given and try to solve them in the ways we know and are used to. We’re used to telling another human what to do and taking advantage of their humanness: their ability to reason about our instructions, retrieve all the required information and perform the steps necessary to complete a task.

This has led to many attempts at making software more “human”. The assumption here is that the more human-machine interaction resembles human-human interaction, the more successful it will be. Thus, the technology needs a personality. AI is not only the technology used to build a product – it becomes the product.

But is human-human interaction really the holy grail? We chose to work with and talk to human assistants because it was the most efficient option, just as having someone knock on your window was the most efficient way to make sure you woke up on time. The real goal here is to enable a computer to perform the same complex tasks as a human, not to replace one user interface with another. The problem with a lot of conversational applications is that they’re not actually trying to solve the underlying problem; they’re simply reengineering a makeshift solution born out of necessity. It’s not enough to apply a new technology to an existing process. It needs to be used as a tool, a building block in an entirely new system.

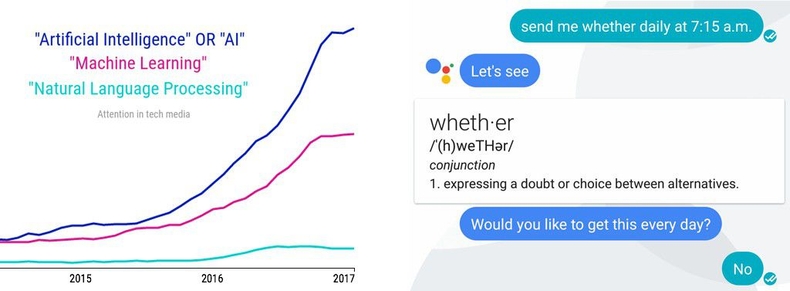

AI has a PR problem

The media is full of reports about all the great things technology is now able to do, powered by the magic of AI. New “breakthroughs” are announced almost daily. AI can turn your selfie into a van Gogh painting, dominate poker tournaments and even drive cars. This, together with the imagery of AI as a humanoid entity, leads people to believe that we must have mastered the “easy parts” and are now on to tackling the “hard parts”. What an exciting time to be alive.

Sources: 12K INDEX, @Summerson

This misleading messaging is at least partly to blame for the unrealistic expectations people have of consumer products, and for the disproportionate amounts of money thrown at business ideas that are flat-out infeasible and ambitious in the wrong ways. Those ideas are usually based on the assumption that the lowest-value labour will be the easiest to automate. This worked for manufacturing, but when it comes to intelligence, it is exactly the wrong strategy.

Things that are hard for a human are often very easy for a computer, and vice versa. For example, most people can understand figures of speech without thinking twice and are able to make fairly subtle judgements. On the other hand, we’re very limited by our biology: we’re easily exhausted, our memory is bad, and when interacting with our environment, we are forced to rely on what we can hear and see. Just because something is very hard for us doesn’t mean human-level performance will be difficult to replicate. Often, it’s quite the opposite.

Self-driving cars are possible because they use laser and radar sensors to generate a 360-degree map of their surroundings and analyse this data instantly and with mathematical precision. They’re able to do something that humans can’t, and they achieve the goal of driving in a very different way. That makes them much better at it than, say, a robot driver. The fact that self-driving cars are now possible does not mean that machines are becoming more “intelligent” or more “like us”. Intelligence and human capabilities are actually a very bad baseline for technology.

Whether or not something is intelligent is a fake question, because the answer doesn’t say anything about the capabilities themselves. Rather than trying to engineer human-like qualities and doing a bad job at it, we should use the technology for what it’s already really good at. Instead of talking about “Artificial Intelligence”, we should think of it as a Machine Learning loop of feedback and control. In “AI’s PR Problem”, Jerry Kaplan writes:

We should stop describing these modern marvels as proto-humans and instead talk about them as a new generation of flexible and powerful machines. We should be careful about how we deploy and use AI, but not because we are summoning some mythical demon that may turn against us. Rather, we should resist our predisposition to attribute human traits to our creations and accept these remarkable inventions for what they really are—potent tools that promise a more prosperous and comfortable future.

Even if you do believe that we’re close to self-improving AI and that we need to discuss safety issues and the imminent dangers ahead, you should care about making this point. Sometime in the future, you’re going to want to tell people: “It’s different now!” You should be careful to distinguish the current technologies from the dangerous thing you’re expecting.

The way we communicate is powerful, because it shapes our perception of the world. But this also means we can use it to paint a realistic picture and correct false impressions. Messaging that promotes the technology as “the AI” is harmful, and we need to fix it. And please, finally, stop using that same old fucking wired brain illustration.